There’s a “study” (I refuse to say that word without air quotes) widely circulating that supposedly PROVES shock collars are more effective than rewards-based training at inhibiting chasing behaviours in dogs.

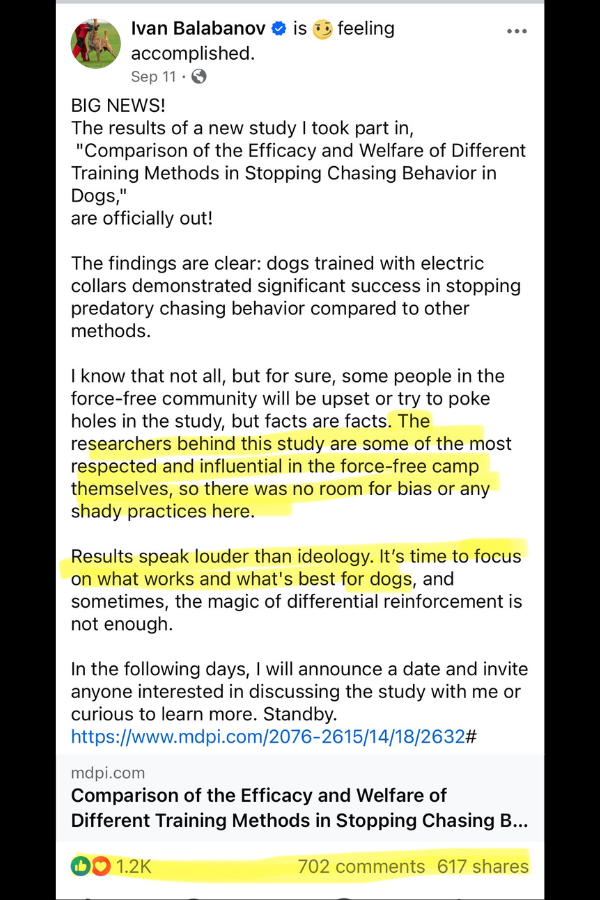

The lead trainer involved is claiming that “the findings are clear”, that “it’s time to focus on what works and what’s best for dogs”, and that “the researchers behind this study are some of the most respected and influential in the force-free camp themselves, so there was no room for bias or any shady practices here”.

[When someone feels the need to tell me upfront there were no shady practices, I automatically assume there was shade. Anyone else?]

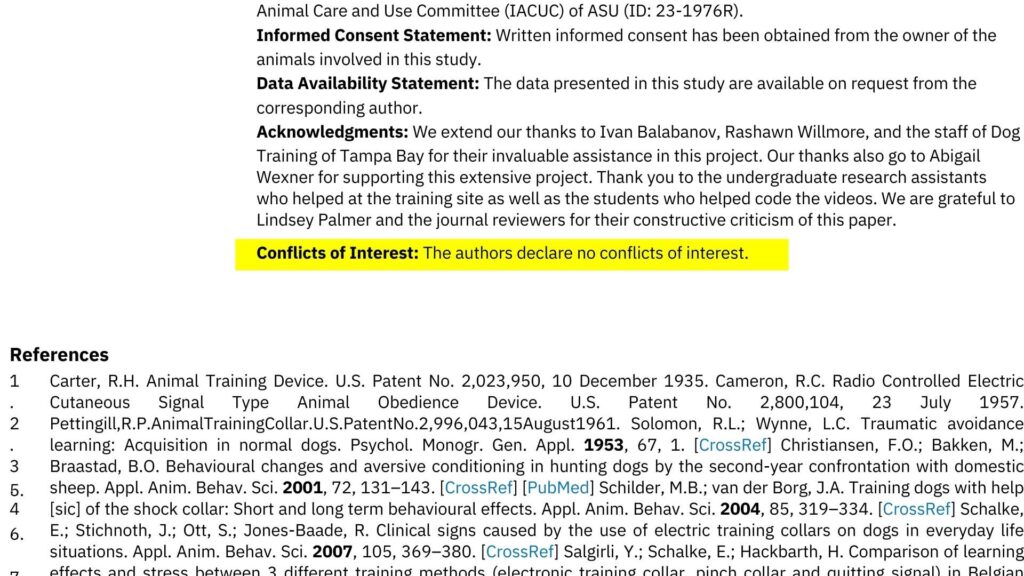

The authors of the paper, Anamarie Johnson and Clive Wynne, declared no conflict of interest, implying that this is a truly unbiased and properly conducted study.

But was it?

Unfortunately, most people do not read past the study’s headline and abstract, which in this case seem to strongly suggest that e-collar training is vastly superior with minimal downsides. Was that actually shown, though?

I personally feel this study is so biased and improperly done that it warrants an ethics review and retraction. I don’t say those words lightly.

I’m going to explain why, but I also encourage you to read the paper for yourself HERE.

Stopping chasing behaviours (also known as prey drive training) is particularly close to my heart because it has been my biggest challenge with my own dog, Neirah. She is a corgi-heeler cross with a history of chasing, catching, and killing animals. I successfully trained her to stop chasing using exclusively reward-based training, and have since taught many other dogs the same. (Tutorial video HERE).

I know first-hand how successful force-free training can be for inhibiting chasing behaviours, so I don’t want to see a poorly done study such as this one used as justification for shock collar training. Let’s dive in!

If you prefer video content, you can also watch my review here:

What’s the difference between punishment and rewards-based dog training?

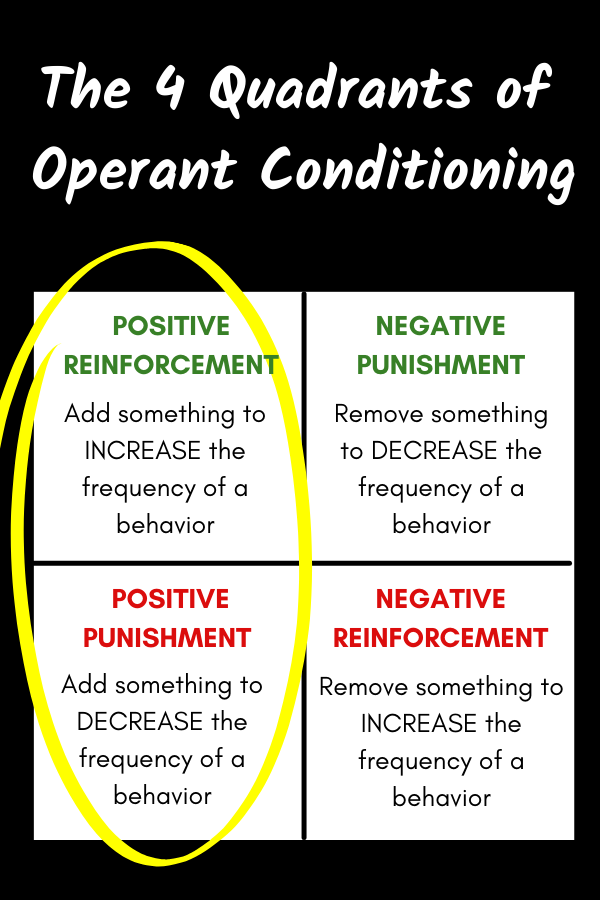

I want to give you a brief review of how both punishment and rewards-based training methods can be used to elicit behaviour change in dogs. This particular study used the addition of shock collars as punishment and treats as reinforcers. So, what does that mean?

Positive punishment is the addition of something that the dog dislikes (such as a shock from a shock collar, a spray from a spray bottle, or a choke chain correction) to decrease the frequency of an undesirable behaviour.

Positive reinforcement is the addition of something enjoyable (each dog’s preferences are different, but this could include things like affection, praise, play, or food rewards) to increase the frequency of a desired behaviour.

Let’s pretend that we’re trying to teach a dog to walk nicely on leash:

If you were leash training, you could use a leash correction each time that the dog pulls on leash to punish them for pulling and to decrease the chances of them pulling again. Or, you could offer a treat reward when they’re walking nicely beside you to reinforce the desired behaviour and make it more likely that the dog will repeat that.

Arguably both methods will work, but only if the dog clearly understands why they received the punishment or the reward.

Proper timing and administration of the reward or punishment is necessary if you want to successfully change a dog’s behaviour.

This basic, simple requirement for training success is incredibly important to keep in mind as we go through the study because only one group actually had that need met.

Okay, let’s jump into the study!

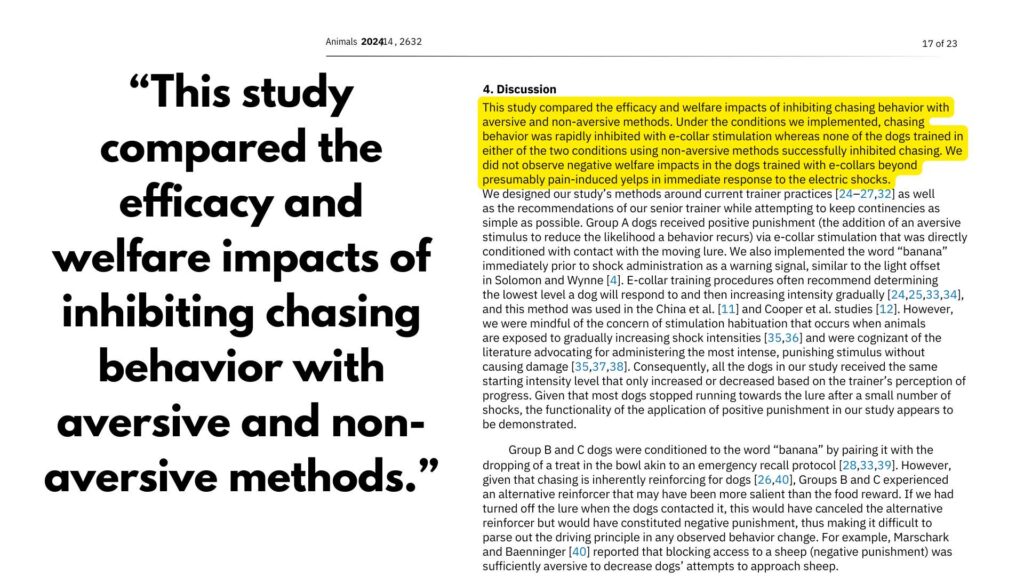

As the authors stated, “This study compared the efficacy and welfare impacts of inhibiting chasing behavior with aversive and non-aversive methods”.

Let’s tackle the welfare results first:

The Oxford definition of welfare is “The health, happiness, and fortunes of a person or group”.

An accepted definition of animal welfare is that “Welfare comprises the state of the animal’s body and mind, and the extent to which its nature is satisfied”.

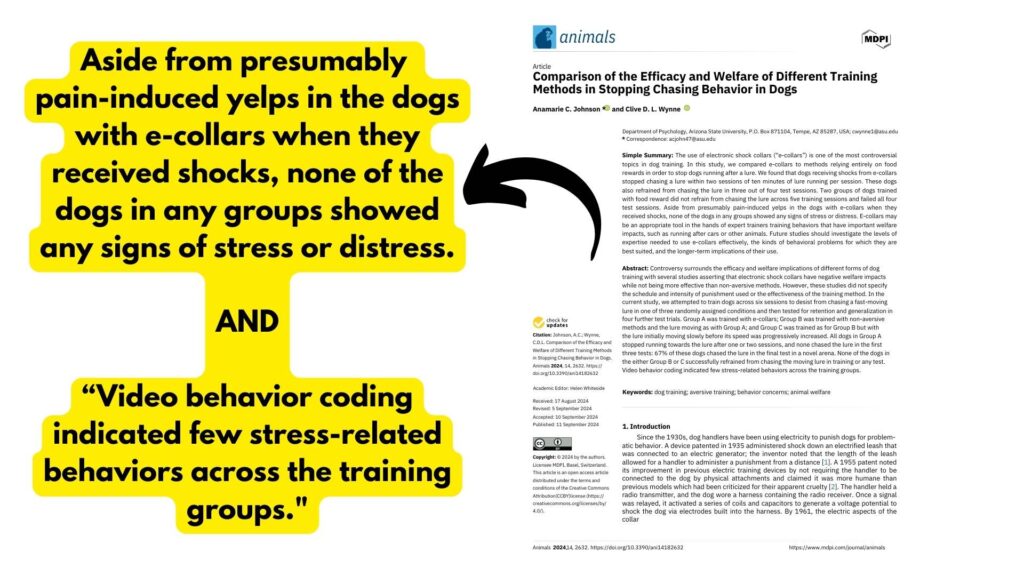

This particular study made the bold statements on page 1 that “Aside from presumably pain-induced yelps in the dogs with e-collars when they received shocks, none of the dogs in any groups showed any signs of stress or distress.” and “Video behavior coding indicated few stress-related behaviors across the training groups.”

First, I would like to point out that a dog yelping in pain already IS a welfare issue.

I would also argue that this is very misleading wording because it implies that welfare wasn’t otherwise affected. In actuality, they didn’t successfully track anything else that would be indicative of welfare or stress during this study, which I will show.

This study seemingly meant to track welfare in two ways (page 9): 1) by coded video analysis of the dogs’ actions and behaviours, and 2) by fecal cortisol levels.

Let’s talk about cortisol first.

The study acknowledges that “The use of cortisol levels as stress indicators has been questioned because of the range of external variables that can confound results” (page 18). However, the authors used it as their measurable marker anyway.

As the study states, “Since peak fecal cortisol is observed 24 hours after simulation, the levels obtained on a given day reflect cortisol levels from the day prior”. Therefore, they treated the samples collected on day one of training as a reflection of each dog’s baseline before arriving at the training facility, the sample collected on day two as a reflection of the stress on day one, and so on.

If a measurable marker is going to be used to suggest differences between groups due to a specific intervention, an appropriately done study would keep all other conditions except that intervention the same across groups (or at minimum consistent with a dog’s baseline).

In a study like this one, that would mean that outside of the very brief training sessions the dogs received each day, they would need to keep conditions consistent so they could truly attribute the differences in daily cortisol to the training itself.

This basic requirement was not met, and no information was provided about what the dogs experienced each day outside of their very brief training sessions.

Did the dogs go to the vet? Did they have long car rides to the training centre? Do they live outside, and if so how was the weather? Were other punishment methods used at home? Does the dog have underlying health issues? Any other stressful behaviours that may have been triggered such as leash reactivity?

By failing to keep other potential stressful events consistent during the testing period, the study could never claim that the differences in a potential stress marker were exclusively due to different training methods.

However, this doesn’t even matter because not enough samples were taken anyway.

Fecal samples were only opportunistically collected, and the authors admitted that, of the dogs included in the final test results, they were only able to obtain daily samples from 2 dogs in group A, 1 dog in group B, and 4 dogs in group C, a total of seven dogs (page 12).

Unsurprisingly, with so few dogs actually sampled, no significant differences could be found between the groups. The authors admitted that “Because not all dogs provided a sample on all days, the sample size available here is too small to allow significant conclusions.” (page 18)

Essentially, the results of the fecal cortisol samples that were obtained during this study were unusable for any sort of stress comparison between training methods.

Next let’s look at the coded video analysis of the behaviors that the dogs exhibited as a comparison of stress.

The authors stated that “The typically fast-moving activity of the dogs in this study made it impossible to code more subtle behaviors that have been reported as indicative of stress in previous studies such as lip licking, ear position, and body posture.” (page 18)

Essentially, subtle low-level stress signals were not measured at all.

What the authors did note, however, was that “All dogs yelped on shock stimulation in our study with only scarce occurrences of yelping for a few dogs in the other groups”.

The authors also note that “Vocalizations, particularly yelping, are a common metric of pain and distress” and “This is consistent with Schilder and Van Der Borg’s claim that e-collar shocked dogs experience some level of pain”.

I found this to be a particularly impactful admission from the authors, since a common sentiment is that “shock collars don’t hurt in skilled hands”. This study used supposedly expert trainers, and yet 100% of the shock collar dogs still yelped in pain.

So: 1) All shock collar dogs experienced pain during training and 2) No other welfare testing was successfully done throughout the study.

So, how did the authors interpret their data?

I’ll remind you that the authors’ takeaways on page 1 were “Aside from presumably pain-induced yelps in the dogs with e-collar when they received shocks, none of the dogs in any groups showed any signs of stress or distress.” and “Video behavior coding indicated few stress-related behaviors across the training groups.”

Their wording feels intentionally misleading since other markers weren’t ACTUALLY studied.

I feel that the only welfare claim that the authors can make from their data is that shock collars hurt. However, is this pain worth improved results?

As the authors mentioned “Painful experiences during training are not the worst possible consequences for a dog with behavior incompatible with human cohabitation. Problem behavior is one of the major causes of a relinquishment of family dogs to animal shelters, and chasing behavior can have deadly consequences.” (page 19)

The authors seem to be implying that it might be better to hurt dogs rather than have them die?

So, were the results so shockingly different (pun fully intended) that they can actually make that claim and say that the pain is worth it? Let’s look at the methods and results!

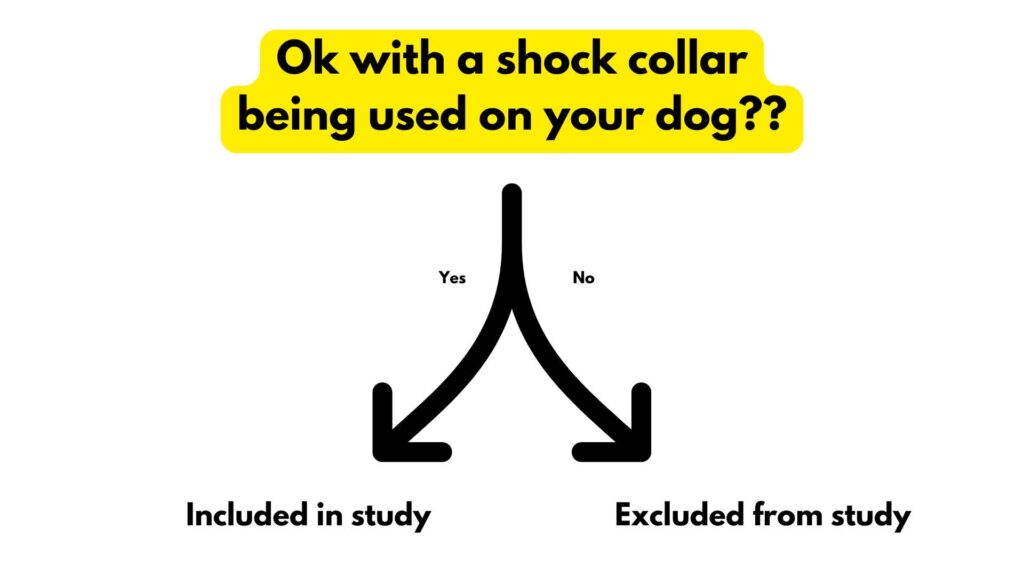

30 dogs were recruited for this study from an online survey that was shared on social media (page 5). The study didn’t explicitly state whose social media these dogs were recruited from, but I have seen many uncontested claims that it was the shock collar trainers’ platforms. If true, this makes the sample and the participants completely biased.

In order to participate in this study, the dogs’ guardians also needed to be okay with shock collar use on their dog, which already biases who would have applied.

In order to be included in the study, the dogs also had to demonstrate a strong desire to chase a lure. On day one, the dogs were given 20 minutes to interact with the lure, then given a break of two or more hours, and then tested again to see if they would want to chase the lure in a second session. Only 19 of the 30 dogs did so, and those 19 made up the study’s small sample size.

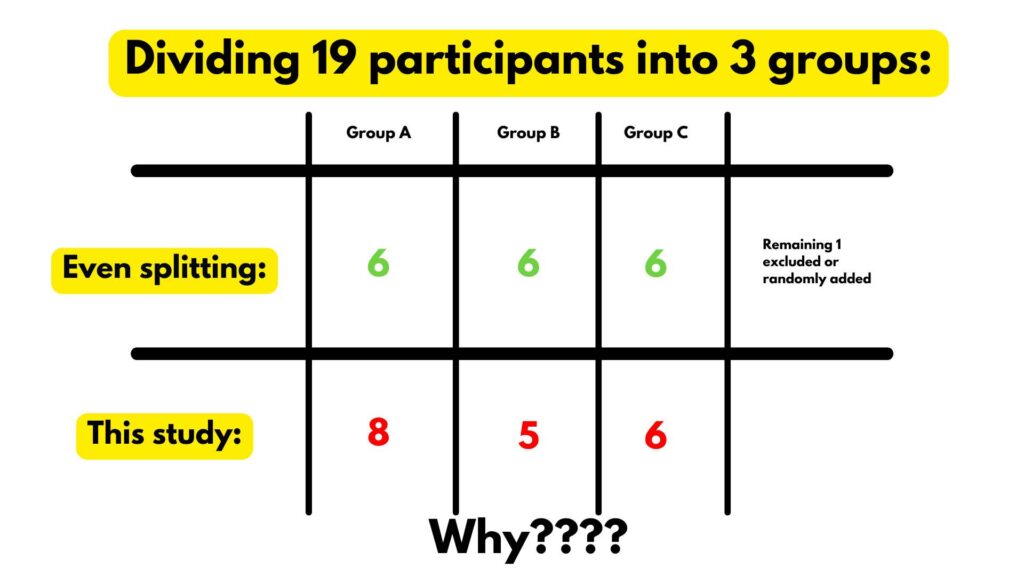

Next, these 19 dogs were supposedly “randomly assigned” to three different groups (page 6).

I want to point out that proper studies don’t just say “we divided the subjects randomly”. Instead, they would explicitly say how this randomization occurred.

Were numbers picked from a hat? Were groups computer-generated? This needs to be stated in the study write-up.

It should also be noted that the participants weren’t split evenly between the three groups.

Instead, there were:

- 8 dogs assigned to group A, which was trained with a shock collar and a high-speed lure

- 5 dogs assigned to group B, which was supposedly trained with treat rewards and a high-speed lure

- 6 dogs assigned to group C, which was supposedly trained with treats and a progressive lure speed

No explanation was provided as to why the supposed randomization resulted in three uneven groups.
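Since the paper doesn’t say how the dogs were randomized, here is a minimal, purely illustrative sketch of one common and fully documentable approach: shuffle the dogs using a recorded random seed, then deal them round-robin into the groups. The dog IDs, the seed value, and the `randomize_into_groups` helper are all hypothetical and are not taken from the study. With 19 dogs and three groups, this shuffle-and-deal approach always produces groups of 7, 6, and 6. Simpler schemes (such as an independent random draw for each dog) can legitimately produce uneven groups, which is exactly why a study needs to state which method it used.

```python
import random


def randomize_into_groups(subject_ids, group_names, seed):
    """Shuffle subjects with a recorded seed, then deal them round-robin into groups.

    Hypothetical helper for illustration only. Reporting the seed lets anyone
    reproduce the exact same assignment later.
    """
    rng = random.Random(seed)      # dedicated generator so the result depends only on the seed
    shuffled = list(subject_ids)   # copy so the caller's list is left untouched
    rng.shuffle(shuffled)          # random order of subjects
    groups = {name: [] for name in group_names}
    for i, subject in enumerate(shuffled):
        # Deal like cards: group sizes can differ by at most one
        groups[group_names[i % len(group_names)]].append(subject)
    return groups


# 19 hypothetical dog IDs, three groups, and an arbitrary example seed
dogs = [f"dog_{n:02d}" for n in range(1, 20)]
groups = randomize_into_groups(dogs, ["A", "B", "C"], seed=2023)
for name, members in groups.items():
    print(name, len(members))   # prints A 7, then B 6, then C 6
```

Because the seed is reported alongside the method, anyone can rerun the script and get the identical assignment, which is the kind of transparency a properly written methods section provides.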

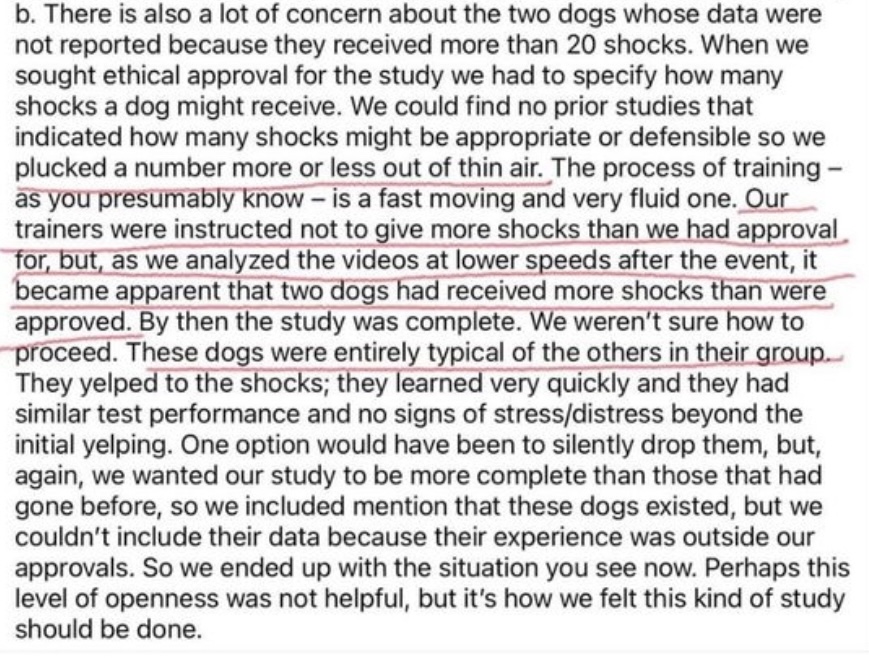

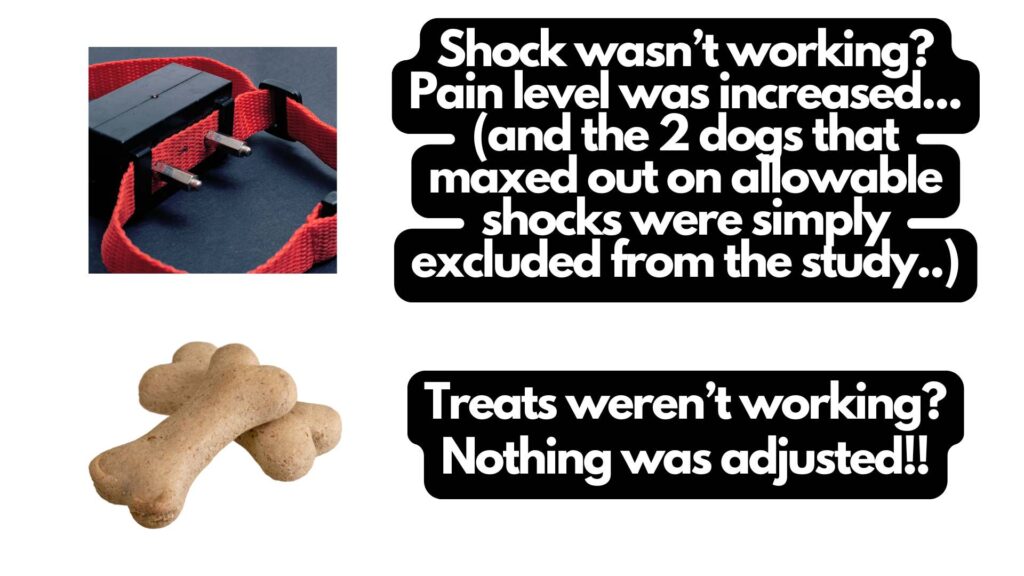

It should also be noted that two of the shock collar dogs were later removed from the study because they were shocked in EXCESS of the 20 times that the Institutional Animal Care and Use Committee (IACUC) of Arizona State University ethically allowed during this study. This left only 17 dogs that were actually analyzed.

One of the study authors Clive Wynne has since addressed this on social media saying:

“There is also a lot of concern about the two dogs whose data were not reported because they received more than 20 shocks. When we sought ethical approval for the study we had to specify how many shocks a dog might receive. We could find no prior studies that indicated how many shocks might be appropriate or defensible so we plucked a number more or less out of thin air. The process of training – as you presumably know – is a fast moving and very fluid one. Our trainers were instructed not to give more shocks than we had approval for, but, as we analyzed the videos at lower speeds after the event, it became apparent that two dogs had received more shocks than were approved. By then the study was complete. We weren’t sure how to proceed. These dogs were entirely typical of the others in their group. They yelped to the shocks; they learned very quickly and they had similar test performance and no signs of stress/distress beyond the initial yelping. One option would have been to silently drop them…”

I have NEVER heard a researcher ponder “silently dropping” data points and was shocked to read that, particularly when discussing an ethics breach.

Were the trainers not being monitored during the study to ensure the dogs WERE treated within the limits approved by the IACUC (limits that, the authors admit, had already been plucked out of thin air)?

Apparently not 🙁

However, let’s address this supposed lack of impact on results. The authors claim that removing the two over-shocked dogs wouldn’t have changed the study’s overall conclusions. How can this be true?

Presumably, if the dogs were responding to the punishment intervention (and were no longer chasing the lure), then they wouldn’t have needed to be shocked beyond the 20 times permitted, correct?

Yet they were shocked in excess of this.

This implies they actually were NOT responding to the punishment intervention assigned to them since they had to be punished more than was approved in the study design.

And yet in the study abstract (page 1), which is commonly the most-read section of a paper, the authors state that “dogs receiving shocks from e-collars stopped chasing a lure within two sessions of 10 minutes”. They also state in the conclusion on page 20, “We found that dogs trained by professional expert trainers with aversive e-collar stimulation successfully inhibited chasing a lure in a 5-day study of training”.

These statements imply a 100% success rate for shock collar training. However, they leave out that 25% of the dogs in that intervention group (2 of 8) had to be excluded because they were shocked more times than the study allowed, which suggests they weren’t responding to the intervention.

If these dogs that weren’t responding to the maximum number of shocks allowed during the study were included in their results, would these main takeaways still be true?

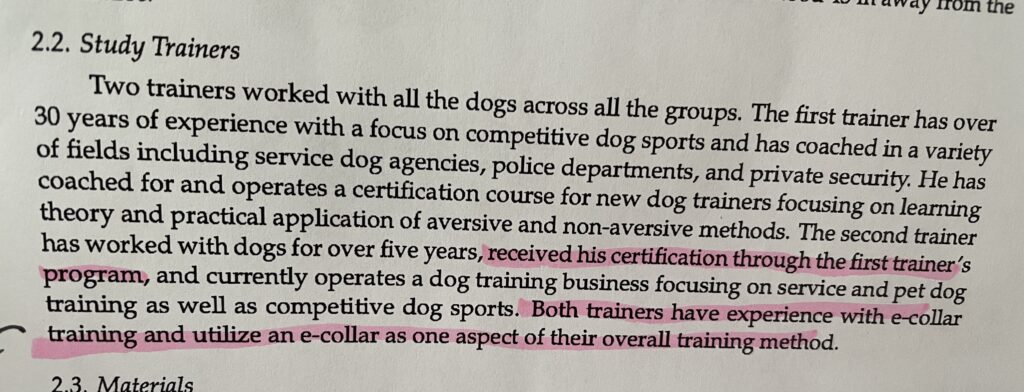

Let’s move on and look at the two dog trainers included in the study next (page 5).

It’s incredibly important to point out that NO reward-based trainer was included in this study.

Instead, all three groups of dogs were trained by two shock collar trainers, one of whom received his certification from the other.

One of those trainers in particular is also extremely vocal online about the necessity of using aversive methods such as shock collars to train a dog. Therefore he would have a vested personal interest in wanting the shock collar group’s results to appear better than the rewards group.

In a study that was supposedly comparing efficacy and welfare outcomes between aversive and reward-based training methods, wouldn’t one assume that a skilled reward-based trainer would have been involved in handling the dogs, rather than only two shock collar trainers?

Consider that for a second: this study 1) only used trainers who loudly and publicly advocate for shock collar use and would therefore have a vested personal interest in skewing the results, 2) was conducted at one of those trainers’ own facilities, 3) seemingly recruited its participants from those trainers’ social media platforms, and 4) used training methods developed with recommendations from that trainer (as stated by the authors themselves on page 17: “the recommendations of our senior trainer”).

Despite all of that, the authors still somehow declare NO CONFLICT OF INTEREST?!

If that’s not the set up for an incredibly biased study, then I honestly don’t know what is.

However, let’s pretend, as the study did, that none of that happened, and move on.

Training set-up:

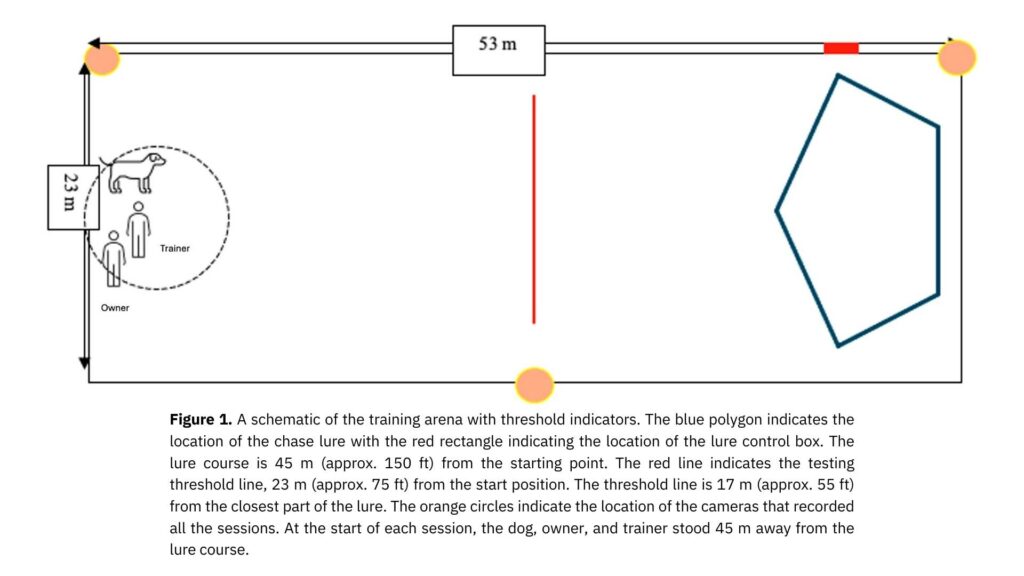

A 53m by 23m area enclosed with plastic fencing was created for the study. During training, the dog, the dog’s guardian, and the trainer would start on one side of the arena, about 45m (roughly 150 ft) from the mechanical lure.

The study mentions that the lure could be stationary or move at low, medium, and high speeds. However, I couldn’t find any reference to what those speeds actually were.

For the training, “all dogs were exposed to the word banana as a signal to stop moving towards the lure. Banana was selected as it likely had no prior association for the dogs in terms of a training cue”. I think that was probably one of the only fair parts of the study.

Now let’s dive into the training that each group received.

Group A was the shock collar group. Despite the authors stating that “E-collar training procedures often recommend determining the lowest level that a dog will respond to, and then increasing intensity gradually” (page 17), all dogs were started at a shock level of 6 out of 10. It should be noted again that during training 100% of the dogs in group A yelped in pain, so we know that the shocks were at a level that would definitely be considered punishing to the dogs.

Initially the dog, its guardian, and the trainer stood at one side of the arena and then the lure was started at its highest speed. When the dog ran and contacted the lure, a shock was immediately delivered as the trainer said banana. The study mentions that “contact and proximity to the lure were important to classically condition the dogs to associate contact with the lure to receiving a shock, as well as pairing of the novel word banana”.

Interestingly, you’ll see that in the reward groups, this careful pairing to make sure the dog understood that its action and the outcome were associated was not done.

During group A’s training, if the dogs continued to pursue the lure, they were shocked again at a level of up to 10 out of 10, entirely at the trainer’s discretion.

As the training sessions continued for group A and the dog stopped running at high speeds towards the lure, the trainer began saying banana without a shock as the dog approached the threshold line, and then shocked the dog if it didn’t move away from that line.

Another distinction worth noting is that the dogs in group A received six training sessions in total over the course of 3 days, whereas the dogs in groups B and C received only five. That is roughly 17% fewer sessions for the reward groups.

Now let’s talk about Group B, which was supposedly trained with treat rewards and a high-speed lure.

The goal during the first session was to “associate the word banana with the presentation of a high value treat”. Unlike for group A, where they specified exactly what punishment level was used, the authors didn’t state what the high-value reward actually was. I was immediately curious, as this would have a massive impact on results.

The analogy that I often give people is that they would be much more motivated to work for $100 than they would be for $1. If the dogs were given the equivalent of a $1 reward versus a $100 one, that would vastly impact their desire to work or perform the desired behavior.

The authors admitted (page 19) “We did not test whether the food rewards we deployed were highly valued” as well as “We asked owners to provide their dogs highest value treats, but it would have been interesting, had time permitted, to have carried out a preference assessment with a range of food.”

Essentially, the researchers had no idea if the treats being used actually were high value to the dogs like they claimed in the methods.

It’s also worth noting that the treat value did not change throughout the training regardless of how the dog responded to it, whereas with the punishment-based training the severity was increased if the dog did not respond as desired.

This is a massive source of bias: only one group’s intervention level was adjusted based on how the dogs responded.

Lastly before we go into training specifics, I want to point out that for dogs that are inclined to chase, the act of chasing is a massive reward, often more powerful as a reinforcer than any food would be. The authors do mention this in the discussion where they say “It is possible that our choice of food as a reinforcer was inappropriate…. given that the dogs tested and trained were highly motivated to chase the lure as demonstrated in day one exposure, a reinforcer that related more closely to chasing behavior, such as an opportunity to chase a toy like a ball or play with a flirt pole, might have been more successful.”

When I was training my own dog not to chase animals, I myself needed to start with a fur toy (my favourite brand is linked HERE) as a reward. Despite normally being food motivated, treats held no value for her during prey drive training. I was only able to switch from fur toys to treat rewards once the behavior was already proofed.

That the authors only recognized the omission of toys as an error after the fact, rather than considering it during the study design, further shows me how important it would have been to include a rewards-based trainer if they actually wanted a fair comparison between methods.

In this study the punishment group used something that was certainly punishing (shown by yelping), but the rewards-based group didn’t necessarily use something that the dog even WANTED.

Now let’s jump into how the “rewards-based training” was actually done.

The dog, the dog’s guardian, and the trainer stayed in an X-pen at the starting area of the arena. The lure was present but stationary. For five two-minute sessions, every 5 seconds the trainer would say banana and drop a treat into a metal bowl, seemingly regardless of the dog’s proximity or whether the dog had actually consumed that treat.

The study suggested that this was done to build an association between the word banana and a treat, and they mentioned it as an approximation of recall training. However, I’ve never heard of recall being trained this way.

Normally with positive reinforcement training, the treat is offered AFTER the dog successfully does a desired behaviour. During recall that would mean that after the dog responds to their recall cue and they return, then the reward would be presented. In this study the dog did not need to do any specific action after hearing “banana” for the trainer to drop the treat in the bowl.

Instead of teaching recall, it seems more like they were trying to condition “banana” to be a marker word.

We condition marker words by immediately offering the dog a treat (or other reward) after saying a specific word. Once conditioned, marker words are then used to promise the dog a reward for doing a desirable behaviour. However, in proper conditioning of marker words the dog needs to receive the reward right after hearing that word. It’s unclear whether that actually happened during this study.

Even the authors seem apprehensive about this method as they note (page 18) “Indeed in a more ideal setup conditioning sessions would have lasted over several days, possibly with a longer delay between each vocalization of banana and the presentation of food. A more complete design would have included tests of effectiveness of the food reward and whether the dogs indeed learned the paired association between saying banana and delivering food.”

Basically, they had no idea whether an association between banana and treat was actually formed for groups B and C.

For this review, let’s pretend that the pairing did work and that banana was loaded as a marker word where the dog knew that hearing “banana” means “a treat is on the way for what you just did”.

Rewards-based trainers will frequently use a marker word to mark the precise moment in time that a dog completes a desired behaviour that we want them to repeat. If the marker word is spoken right after the dog performs a desired behaviour, then it tells the dog which behaviour needs to be repeated in order to get another reward.

In essence, marker words encourage dogs to repeat whatever they did right before they heard that word.

So, how did the trainers in this study use the apparent marker word banana? Let’s take a look.

During training the lure was started at high speed. “If the dog ran towards the lure, the moment the dog crossed the pre-determined threshold line, the trainer said banana and dropped a treat in the metal bowl at the starting point 23m away. If the dog continued to run towards the lure, the trainer continued to say banana every 5 seconds on average until the dog responded.” (Page 7-8)

Wait, WHAT?!?

During group A’s training, the punishment marker (the shock) was administered while the dog was chasing the lure. As discussed, for punishment to work for behavior change it needs to be administered while the dog is doing the undesirable behavior, so this somewhat makes sense.

Also as discussed earlier, for reinforcement or rewards-based training to work, the reward needs to be administered or promised while the dog is doing or has completed the desirable behavior.

Hilariously, the study seemingly did the exact OPPOSITE and promised the dog a reward by saying banana while the dog did the undesirable behavior of lure chasing.

To me this seems to be the equivalent of promising a dog a treat while they pull on leash and thinking that somehow they’ll learn to pull less frequently. It makes absolutely no sense.

At best the trainers attempted to use the word banana as a treat bribe to bring the dog back to them, but it appears that they were accidentally reinforcing the behaviour that they were supposedly trying to decrease.

It should come as zero shock (pun still intended) that the treat rewards groups continued to chase the lure during the tests, while the shock collar group showed better results.

Rewarding the desired behavior is the entire essence and most basic requirement of reward-based training, and even that wasn’t met in this study.

In my opinion, since the study didn’t include ANY examples of proper rewards-based training for chasing behaviours, it certainly cannot be used to make any claims about the differences in efficacy between punishment and rewards-based training (despite the authors still doing so).

If you think that I’ve been overly harsh in my review and that the study couldn’t have been that bad since it was “published in a journal”, then I encourage you to Google “MDPI predatory journal” and decide for yourself.

So, what can we actually conclude from this study?

1) That shock collars cause pain, as shown by 100% of the shock collar group doing “pain-induced yelping” and

2) that, unsurprisingly, rewards-based training doesn’t work when it’s done incorrectly.

Let me ask you a silly question: if you used a vacuum upside down, would it work?

Obviously not.

If you blatantly use something incorrectly, it will fail.

Just like it’s not the fault of the vacuum for “failing” when used upside down, it’s also not the fault of a training method for “failing” when it’s used completely backwards.

Yet, this study seems to suggest just that.

I would laugh at how terribly this study was done if it didn’t have potential welfare effects for many, many dogs.

Dogs are sentient beings. They FEEL. Terrible research like this has the ability to do a lot of harm by suggesting that rewards-based training doesn’t work and that shock collars don’t have considerable welfare effects. It misleads dog guardians.

I’m genuinely shocked (third and last pun, I promise) that researchers, particularly ones that claim to care about the welfare of dogs, put their names to such a biased & inappropriately executed “study”.

Disclosure: Happy Hounds uses affiliate links. Purchasing with these links will not cost you any extra, but I get commissions for purchases made through these links. Affiliate links help me to continue to offer free resources & blog posts. I would love if you used them!
